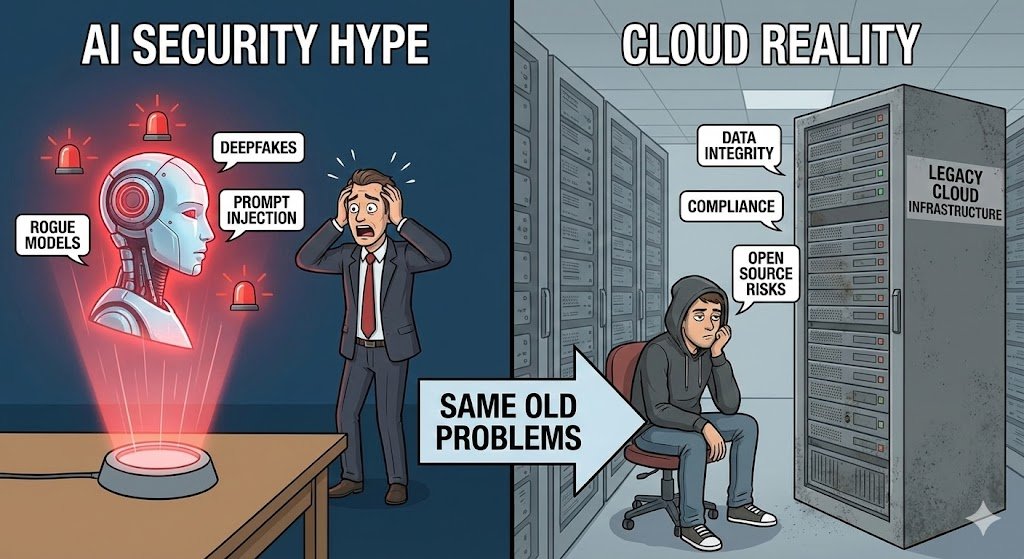

A new survey reveals that enterprises’ biggest AI fears aren’t about rogue models or hallucinations. They’re worried about the boring infrastructure underneath.

By Ali T. | Dec 23, 2025

Here’s the twist nobody saw coming: After two years of breathless hype about AI transforming everything from customer service to code generation, it turns out companies’ biggest security concern about artificial intelligence is… the cloud infrastructure it runs on.

That’s according to a new report from Palo Alto Networks, which surveyed 2,800 corporate executives and cybersecurity practitioners about their AI adoption anxieties. And while you might expect the top worries to involve deepfakes, prompt injection attacks, or models going rogue, the reality is far more mundane — and revealing.

Respondents’ biggest concerns? Cloud security. Data integrity. Regulatory compliance. Open source library risks. In other words: the exact same stuff security teams have been losing sleep over for the past decade, just with “AI” stamped on top.

“The attack surface, it turns out, hasn’t moved far,” Palo Alto Networks writes in the report. “It’s still grounded in cloud infrastructure.”

Meet the new stack, same as the old stack

This finding is both reassuring and deeply frustrating. Reassuring because it means companies don’t need to invent entirely new security paradigms from scratch. Frustrating because it suggests the enterprise security fundamentals that organizations have been struggling with for years — identity management, incident response, cloud visibility — still haven’t been solved.

And now they’re AI’s problem too.

The report’s recommendations reflect this reality check. Want to secure your AI systems? Start with the basics: treat identity management as a “tier-one security priority,” streamline your incident response procedures, and integrate cloud security into your security operations centers (SOCs). Sound familiar? It’s the same advice security vendors have been giving since, well, cloud security became a thing.

Identity is the new perimeter (again)

The identity angle is particularly striking. More than half — 53 percent — of organizations in the survey identified “overly lenient identity management practices” as a top security challenge. That tracks with what other security firms have been shouting from the rooftops lately.

In November, ReliaQuest reported that nearly half of all cloud attacks it observed involved “identity-related weaknesses.” Rubrik recently went even further, calling identity “the primary attack surface” in cloud environments.

Translation: If an attacker can compromise credentials or exploit misconfigured permissions, they can waltz right into your AI infrastructure — not because of some sophisticated machine learning exploit, but because someone left the keys under the doormat.
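For a sense of what "misconfigured permissions" can look like in practice, here is a minimal sketch that flags Allow statements pairing a wildcard action with a wildcard resource in an AWS-style IAM policy document. It is not taken from the Palo Alto Networks report. The function name `find_overly_broad_statements` and the sample policy are hypothetical, and a real audit would need to account for conditions, `NotAction`, permission boundaries, and more.

```python
# Illustrative sketch only: flags IAM-style statements that allow a wildcard
# action on a wildcard resource. Function name and sample policy are
# hypothetical, not from the report.

def _as_list(value):
    """IAM policy fields may be a single string or a list of strings."""
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def find_overly_broad_statements(policy: dict) -> list:
    """Return Allow statements whose Action and Resource both use wildcards."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement is also valid JSON policy
        statements = [statements]

    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action"))
        resources = _as_list(stmt.get("Resource"))
        # "*" means every action; "s3:*" means every action in one service.
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        wildcard_resource = "*" in resources
        if wildcard_action and wildcard_resource:
            flagged.append(stmt)
    return flagged


# Hypothetical policy attached to an AI training pipeline role.
EXAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": "arn:aws:s3:::training-data/*",
        },
    ],
}

broad = find_overly_broad_statements(EXAMPLE_POLICY)
```

In this example only the first statement is flagged. The second is scoped to one action on one bucket path, which is closer to what least-privilege access looks like.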

The AI security reality check

What’s fascinating here is the gap between the AI security narrative and the AI security reality. The public discourse around AI safety tends to focus on exotic threats: models that manipulate users, training data that gets memorized and leaked, adversarial attacks that fool image classifiers. Those risks are real, and researchers are right to study them.

But in the enterprise trenches, the immediate threat isn’t that your chatbot will hallucinate sensitive information (though that’s a concern). It’s that the cloud bucket storing your training data has overly permissive access controls. It’s that your AI development team spun up compute instances without proper network segmentation. It’s that someone’s AWS keys got committed to a public GitHub repo.
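The leaked-keys scenario is also the easiest of the three to check for automatically. As a rough sketch, and not anything prescribed by the report, the snippet below scans text for strings shaped like AWS access key IDs, which are 20 characters long and begin with `AKIA` (long-term keys) or `ASIA` (temporary keys). The function name `find_aws_key_ids` is hypothetical, and the sample value is the placeholder key AWS uses in its own documentation. Dedicated secret scanners cover far more credential types and reduce false positives.

```python
import re

# Access key IDs: "AKIA" or "ASIA" followed by 16 uppercase letters or digits.
AWS_KEY_ID_PATTERN = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_aws_key_ids(text: str) -> list:
    """Return (line_number, match) pairs for strings shaped like AWS key IDs."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in AWS_KEY_ID_PATTERN.finditer(line):
            hits.append((lineno, match.group(0)))
    return hits


# Hypothetical config file contents using AWS's documented example key ID.
sample = "aws_access_key_id = AKIAIOSFODNN7EXAMPLE\nregion = us-east-1\n"
hits = find_aws_key_ids(sample)
```

Running a check like this before code is pushed, for example in a pre-commit hook, catches the mistake before the key ever reaches a public repository.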

This doesn’t mean AI introduces no new security challenges. The report also highlights concerns about training data integrity and compliance with emerging AI regulations — both of which are genuinely novel. But the foundational layer, the stuff that determines whether attackers can even reach your AI systems, is the same cloud security battleground organizations have been fighting on for years.

What it means for enterprises

For security teams, this should be simultaneously comforting and sobering. Comforting because you don’t need to throw out your existing playbook. Sobering because it means all those cloud security gaps you’ve been meaning to close? They’re now AI security gaps too.

The Palo Alto Networks report essentially argues that securing AI is less about understanding transformer architectures and more about getting the fundamentals right: lock down identities, monitor your cloud environments, have a plan when things go sideways.

Or, to put it more bluntly: You can’t secure what you can’t see, and you can’t protect what you haven’t properly configured. That was true before ChatGPT launched, and it’s true now.

The only difference is that the stakes might be higher — because the systems we’re building on top of that shaky foundation are more powerful, more autonomous, and increasingly central to how businesses operate.

Which means this is probably a good time to finally fix those cloud security basics. Before they become someone else’s AI security breach.

Ali Tahir is a growth-focused marketing leader working across fintech, digital payments, AI, and SaaS ecosystems.

He specializes in turning complex technologies into clear, scalable business narratives.

Ali writes for founders and operators who value execution over hype.